Kubernetes, an open-source container orchestration technology, automates the deployment, scaling, and maintenance of containerized applications. It’s also known as “K8s.”

It was created by Google and is now managed by the Cloud Native Computing Foundation (CNCF).

One of the key concepts behind Kubernetes is the use of “containers.” Containers are a lightweight form of virtualization that lets developers bundle an application and its dependencies into a single package.

This package, called a container image, can then be run on any machine that has a compatible container runtime.

Kubernetes provides a number of features for managing and scaling containerized applications, including:

Automatic scaling based on resource usage, for example adding pod replicas when CPU utilization rises

Self-healing, which automatically restarts or replaces containers that fail

Rolling updates, which let you update containerized applications without downtime

Service discovery and load balancing, which let containers communicate with each other and with external clients
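Self-healing is driven partly by the kubelet, which restarts failed containers according to the pod's `restartPolicy`. Instead of restarting a crashing container in a tight loop, it waits with an exponential back-off, which is what the `CrashLoopBackOff` status reflects. The Python sketch below models only that delay schedule, assuming the long-standing documented defaults (10 seconds, doubling each time, capped at five minutes). Newer Kubernetes releases may tune these values, and the kubelet also resets the back-off after a container has run cleanly for ten minutes, which this sketch ignores.

```python
from typing import List


def restart_delays(failures: int, initial_s: float = 10.0, cap_s: float = 300.0) -> List[float]:
    """Return the wait (in seconds) before each of the first `failures` restarts.

    Models an exponential back-off: the delay doubles after every failed
    restart but never exceeds `cap_s` (five minutes in this sketch).
    """
    delays = []
    delay = initial_s
    for _ in range(failures):
        delays.append(min(delay, cap_s))
        delay *= 2  # double the wait after each crash
    return delays


if __name__ == "__main__":
    # A container that keeps crashing waits longer and longer between restarts.
    print(restart_delays(7))
```

The cap matters: without it, a container that fails for hours would wait longer than any operator would tolerate once the underlying problem is fixed.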

Kubernetes also provides a number of abstractions that allow for the management of containerized applications at a higher level. These abstractions include:

Pods, which are the basic building block of Kubernetes and represent a single instance of a containerized application

Replication Controllers, which ensure that a specified number of pod replicas is always running (in current Kubernetes this job is usually done by ReplicaSets managed through Deployments)

Services, which provide a stable endpoint for communicating with a set of pods

Ingress, which provides external access to the services in a cluster
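To make the Ingress idea concrete: an Ingress resource holds rules that map HTTP paths to backend Services, and an ingress controller (for example, ingress-nginx) does the actual routing. For rules with `pathType: Prefix`, the Kubernetes documentation describes matching element by element on `/`-separated path segments, with the longest matching path taking precedence. The sketch below models only that matching rule; the Service names are hypothetical, and real controllers also handle hosts, `Exact` paths, and TLS.

```python
from typing import Dict, List, Optional


def _segments(path: str) -> List[str]:
    # "/api/v2/" -> ["api", "v2"]; empty segments from leading/trailing "/" are ignored.
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """True if every segment of rule_path is a leading segment of request_path."""
    rule = _segments(rule_path)
    return _segments(request_path)[: len(rule)] == rule


def route(rules: Dict[str, str], request_path: str) -> Optional[str]:
    """Return the backend Service whose Prefix rule matches the most segments."""
    best_service, best_len = None, -1
    for rule_path, service in rules.items():
        if prefix_matches(rule_path, request_path):
            length = len(_segments(rule_path))
            if length > best_len:
                best_service, best_len = service, length
    return best_service


if __name__ == "__main__":
    # Hypothetical rules: path prefix -> Service name.
    rules = {"/": "frontend", "/api": "api-svc", "/api/v2": "api-v2-svc"}
    for path in ["/api/v2/users", "/api/users", "/apiary", "/"]:
        print(path, "->", route(rules, path))
```

Note that `/apiary` falls through to `frontend`: prefix matching compares whole path segments, not raw characters, so `api` and `apiary` are different segments.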

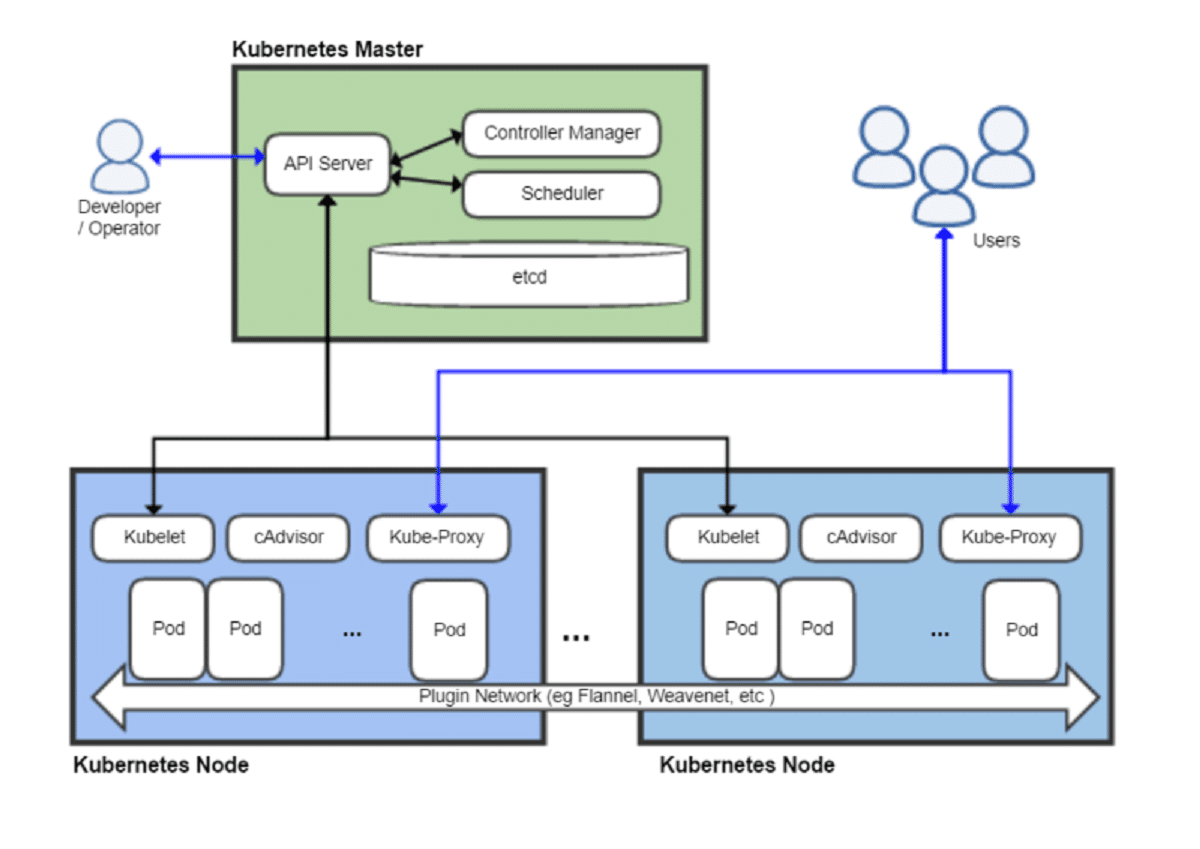

This architecture separates a control plane (historically called the master node), which manages the state of the cluster, from the worker nodes, which run the containers.

The control plane includes several components: the API server, which exposes the Kubernetes API; etcd, a distributed key-value store that holds the cluster's configuration and state; the scheduler, which assigns newly created pods to nodes; and the controller manager, which runs the controllers that keep pods and other resources in their desired state.

Each worker node runs the kubelet, which communicates with the control plane and manages the containers on that node, and kube-proxy, which maintains the network rules that route traffic sent to a Service to the pods behind it.
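The scheduler's job can be pictured as two phases: filter out nodes that cannot fit the pod, then score the remaining nodes and pick the best one. The sketch below is a heavily simplified Python model of that idea. It assumes only CPU and memory requests matter (the scheduler works from requests, not live usage) and scores nodes by how much capacity would remain free, similar in spirit to the "least allocated" scoring strategy. The real kube-scheduler runs many filter and score plugins (taints, affinity, topology spread, and more), so treat this as an illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_allocatable: int     # millicores the node offers to pods
    mem_allocatable: int     # MiB the node offers to pods
    cpu_requested: int = 0   # millicores already requested by pods on this node
    mem_requested: int = 0   # MiB already requested by pods on this node


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter phase: does the node have enough unrequested capacity?"""
    return (node.cpu_allocatable - node.cpu_requested >= cpu
            and node.mem_allocatable - node.mem_requested >= mem)


def score(node: Node, cpu: int, mem: int) -> float:
    """Score phase: average fraction of CPU and memory left free after placing the pod."""
    cpu_free = (node.cpu_allocatable - node.cpu_requested - cpu) / node.cpu_allocatable
    mem_free = (node.mem_allocatable - node.mem_requested - mem) / node.mem_allocatable
    return (cpu_free + mem_free) / 2


def schedule(nodes: List[Node], cpu: int, mem: int) -> Optional[str]:
    """Return the chosen node's name, or None if the pod would stay Pending."""
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(n, cpu, mem)).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", 4000, 8192, cpu_requested=3500, mem_requested=1024),
        Node("node-b", 4000, 8192, cpu_requested=1000, mem_requested=4096),
        Node("node-c", 2000, 4096),
    ]
    print(schedule(nodes, cpu=1000, mem=1024))  # pod requesting 1 CPU and 1 GiB
```

Here `node-a` is filtered out for lack of CPU, and `node-c` wins the scoring because a larger share of its capacity stays free.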

In summary, Kubernetes is an open-source container orchestration solution for deploying, scaling, and administering containerized applications.

It provides features for managing and scaling those applications, along with abstractions for higher-level management.

Its architecture separates a control plane, which manages the state of the cluster, from worker nodes, which run the containers. Kubernetes is widely used and is supported by many cloud providers, making it a strong option for running containerized applications in production.

Understanding the Basics of Container Orchestration

Container orchestration means automating the deployment, scaling, and management of containerized applications, and Kubernetes is an open-source platform built for exactly that task.

At its core, Kubernetes is designed to manage and orchestrate containers, which are lightweight, portable, and self-contained environments that package an application’s code and dependencies. Containers make it easy to run applications consistently across different environments, from development to production.

Here are some of the basic concepts and components of Kubernetes:

Nodes:

These are the individual machines, physical or virtual, that make up a Kubernetes cluster. Each node runs a container runtime, such as containerd or CRI-O, and is managed by the cluster's control plane.

Pods:

Pods are the smallest deployable units in Kubernetes. A pod consists of one or more containers that share the same network namespace (and therefore the same IP address) and can share storage volumes. Pods are designed to be ephemeral, meaning they can be easily created and destroyed as needed.

Services:

Services provide a stable virtual IP address and DNS name for a set of pods selected by labels. They let other applications and services reach a group of pods as if it were a single entity, with traffic load-balanced across those pods.
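A Service finds its pods with a label selector: any pod whose labels include every key/value pair in the selector becomes a backend, and only ready pods should receive traffic. Inside the cluster, the Service is also reachable through DNS as `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is usually `cluster.local`. The Python sketch below models both ideas with plain dictionaries; the pod data is made up, and in a real cluster the endpoints are tracked for you in EndpointSlice objects.

```python
from typing import Dict, List


def matches_selector(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every selector key/value must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """IPs of ready pods that the Service would send traffic to."""
    if not selector:
        return []  # a Service without a selector gets no automatic endpoints
    return [pod["ip"] for pod in pods
            if pod["ready"] and matches_selector(selector, pod["labels"])]


def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """In-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    pods = [  # made-up pod data
        {"name": "web-1", "ip": "10.0.0.5", "labels": {"app": "web", "tier": "frontend"}, "ready": True},
        {"name": "web-2", "ip": "10.0.0.6", "labels": {"app": "web"}, "ready": False},
        {"name": "db-1", "ip": "10.0.0.7", "labels": {"app": "db"}, "ready": True},
    ]
    print(service_endpoints({"app": "web"}, pods))
    print(service_dns_name("web", "shop"))
```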

Replication Controllers:

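Replication controllers ensure that a specified number of pod replicas is always running. In current Kubernetes they have largely been superseded by ReplicaSets, which are normally managed through Deployments.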
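At its heart, a replication controller (or ReplicaSet) runs a reconciliation loop: compare the desired replica count with the pods that actually exist, then create or delete pods to close the gap. The minimal Python sketch below shows one pass of that comparison, assuming pods are identified by name only. The real controller also decides which pods to delete first (for example, preferring pods that are still pending or not ready), while this sketch simply removes the last ones in the list.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """Return (number_of_pods_to_create, names_of_pods_to_delete)."""
    gap = desired - len(running)
    if gap > 0:
        # Too few pods (e.g. some failed or were evicted): create the missing replicas.
        return gap, []
    if gap < 0:
        # Too many pods (e.g. after scaling down): delete the surplus, here the last ones listed.
        return 0, running[desired:]
    # Actual state already matches desired state: nothing to do.
    return 0, []


if __name__ == "__main__":
    print(reconcile(3, ["web-a"]))
    print(reconcile(2, ["web-a", "web-b", "web-c", "web-d"]))
```

Because the controller repeats this comparison continuously, it does not matter why the counts diverged; a crashed node and a manual deletion are handled the same way.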

Deployments:

Deployments provide a higher-level abstraction for managing pods and the ReplicaSets that run them. They let you roll out updates to your application and roll back to an earlier version if something goes wrong.
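During a rolling update, a Deployment's `maxSurge` and `maxUnavailable` settings bound how far the pod count may drift from `replicas`. Both default to 25%, and the Kubernetes documentation states that a percentage is rounded up for `maxSurge` and down for `maxUnavailable`. The Python sketch below reproduces only that arithmetic (it does not simulate the rollout itself) to show the largest total number of pods and the smallest number of available pods allowed at any moment.

```python
from typing import Tuple, Union

Value = Union[int, str]  # an absolute count (e.g. 1) or a percentage string (e.g. "25%")


def to_count(value: Value, replicas: int, round_up: bool) -> int:
    """Convert a maxSurge/maxUnavailable value to an absolute number of pods."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])  # integer math avoids float rounding surprises
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge: Value = "25%",
                   max_unavailable: Value = "25%") -> Tuple[int, int]:
    """Return (max total pods, min available pods) allowed during a rolling update."""
    surge = to_count(max_surge, replicas, round_up=True)
    unavailable = to_count(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    for n in (3, 4, 10):
        print(n, rollout_bounds(n))
```

With the defaults and 10 replicas, for instance, 25% is 2.5 pods, so the Deployment may run up to 13 pods while keeping at least 8 available.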

ConfigMaps:

ConfigMaps let you decouple configuration data from your application code. They store configuration as key-value pairs, which a pod can consume as environment variables or as files in a mounted volume.
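Kubernetes manifests are usually written in YAML, but `kubectl apply -f` also accepts JSON, so they can be generated from code. The Python sketch below builds a ConfigMap and a Pod that mounts it as a volume; when mounted this way, each key in the ConfigMap appears as a file under the mount path. The names `app-config` and `demo`, the keys, and the image `example.com/my-app:1.0` are placeholders chosen for illustration.

```python
import json
from typing import Dict


def configmap_manifest(name: str, data: Dict[str, str]) -> dict:
    """A ConfigMap holding plain-text key/value configuration."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": data,
    }


def pod_with_config_volume(pod_name: str, image: str, configmap_name: str,
                           mount_path: str) -> dict:
    """A Pod that mounts the ConfigMap read-only; each key becomes a file in mount_path."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "config", "mountPath": mount_path, "readOnly": True}],
            }],
            # The volume name "config" links this volume to the volumeMount above.
            "volumes": [{"name": "config", "configMap": {"name": configmap_name}}],
        },
    }


if __name__ == "__main__":
    cm = configmap_manifest("app-config", {"LOG_LEVEL": "info", "FEATURE_FLAGS": "beta=false"})
    pod = pod_with_config_volume("demo", "example.com/my-app:1.0", "app-config", "/etc/app")
    print(json.dumps(cm, indent=2))
    print(json.dumps(pod, indent=2))
```

Saving each printed object to its own file and running `kubectl apply -f <file>` would create it; inside the container, `/etc/app/LOG_LEVEL` would then contain `info`.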

Secrets:

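Secrets provide a way to store sensitive information, such as passwords and API keys, separately from application code. They can be mounted as volumes or exposed as environment variables in a pod. Note that by default Secret values are only base64-encoded, not encrypted, when stored in etcd; encryption at rest has to be enabled in the cluster configuration, and read access should be restricted.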
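The difference between encoding and encryption is easy to see in a Secret manifest: the `data` field holds base64-encoded values, which anyone with read access can decode without any key. The Python sketch below builds such a manifest from plain strings (the `db-credentials` name and the password are placeholders) and decodes a value again to make that point.

```python
import base64
from typing import Dict


def secret_manifest(name: str, values: Dict[str, str]) -> dict:
    """Build an Opaque Secret; values under `data` must be base64-encoded."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


if __name__ == "__main__":
    secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
    stored = secret["data"]["password"]
    print("stored value:", stored)
    # base64 is reversible without any key, so this is obfuscation, not protection.
    print("decoded:", base64.b64decode(stored).decode("utf-8"))
```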

Kubernetes also exposes a powerful HTTP API, the same one `kubectl` uses, that lets you automate tasks and integrate with other tools and systems.
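Every resource in that API lives at a predictable REST path: core resources such as Pods and Services sit under `/api/v1`, while resources in named API groups, such as Deployments in the `apps` group, sit under `/apis/<group>/<version>`. The Python helper below is a small sketch that builds these paths; the namespace and object names in the example are placeholders. A path built this way could be passed to `kubectl get --raw` to inspect the JSON the API server returns.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the REST path for a resource collection or a single object.

    group="" means the core API group (Pods, Services, ConfigMaps, ...).
    """
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace:
        # Omitted for cluster-scoped resources such as Nodes.
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name:
        parts.append(name)
    return "/".join(parts)


if __name__ == "__main__":
    print(resource_path("", "v1", "pods", namespace="default"))
    print(resource_path("apps", "v1", "deployments", namespace="prod", name="web"))
    print(resource_path("", "v1", "nodes"))
```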

Together, these building blocks make Kubernetes an effective framework for managing containerized applications at scale.

Setting up a Cluster and Deploying Applications

To set up a Kubernetes cluster on AWS, you can use the Amazon Elastic Kubernetes Service (EKS), which is a fully managed Kubernetes service. Here are the basic steps to set up a cluster and deploy applications:

Set up an Amazon EKS cluster:

You can create an EKS cluster using the AWS Management Console, the AWS CLI, or an AWS CloudFormation template. You will need to specify the cluster name, the VPC and subnets to use, and the IAM roles that the control plane and the worker nodes will use.

Launch worker nodes:

Once the EKS cluster is created, you can add worker nodes, for example as a managed node group that uses an Amazon EKS optimized Amazon Machine Image (AMI) preconfigured with the necessary components. You can use the AWS Management Console, the AWS CLI, or an AWS CloudFormation template to launch the worker nodes.

Connect to the cluster:

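Once the worker nodes are running, you can use the kubectl command-line tool to work with the cluster. To connect, generate a kubeconfig entry with the AWS CLI command `aws eks update-kubeconfig --region <region> --name <cluster-name>`. If you keep the configuration in a non-default file, set the KUBECONFIG environment variable to point to it.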

Deploy applications:

You can deploy applications to the cluster using Kubernetes manifests, which are YAML (or JSON) files that define the desired state of the application. Running `kubectl apply -f <file>` creates or updates the resources defined in a manifest, and `kubectl delete -f <file>` removes them.
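As a rough sketch, the Python snippet below generates a minimal Deployment manifest as JSON, which `kubectl apply -f` accepts just like YAML. The app name, image, replica count, and port are illustrative assumptions. One detail worth noticing: the Deployment's `selector.matchLabels` must match the labels on its pod template, otherwise the API server rejects the manifest.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Minimal apps/v1 Deployment running `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which pods it owns...
            "selector": {"matchLabels": dict(labels)},
            "template": {
                # ...so the pod template must carry the same labels.
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # e.g. python make_deployment.py > deployment.json && kubectl apply -f deployment.json
    print(json.dumps(deployment_manifest("web", "nginx:1.25", replicas=3, port=80), indent=2))
```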

Scale and update applications:

You can scale and update applications by editing the manifest (for example, changing `replicas` or the container image) and running `kubectl apply` again. Kubernetes then brings the running application in line with the new desired state defined in the manifest.

Monitor and troubleshoot:

You can monitor the health and performance of the cluster and its applications using the Kubernetes Dashboard or third-party tools such as Prometheus and Grafana. For troubleshooting, commands such as `kubectl get`, `kubectl describe`, and `kubectl logs` show resource status, events, and container logs.

Overall, setting up a Kubernetes cluster on AWS using EKS provides a scalable and reliable platform for deploying and managing containerized applications.

Kubernetes and Machine Learning: How to Deploy and Scale ML Models

Kubernetes is a powerful platform for deploying and scaling machine learning models. Here are the basic steps to deploy and scale ML models:

Prepare the model:

Train and test the machine learning model and prepare it for deployment. This typically means wrapping the model in a small serving application and packaging it, together with its dependencies, into a container image.
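What goes inside that image depends on your framework, but the shape is usually similar: an HTTP server that loads the model and answers prediction requests, plus a health endpoint that Kubernetes liveness and readiness probes can call. The sketch below uses only the Python standard library and a hypothetical stand-in "model" (`y = 2x + 1`); the `/predict` and `/healthz` paths and the `{"instances": [...]}` request format are assumptions for illustration, not a standard.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: y = 2x + 1.
WEIGHT, BIAS = 2.0, 1.0


def predict(features):
    """Run the (toy) model on a list of numeric inputs."""
    return [WEIGHT * x + BIAS for x in features]


class ModelHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Health endpoint for liveness/readiness probes.
        if self.path == "/healthz":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        self._send(200, {"predictions": predict(body.get("instances", []))})

    def _send(self, status, payload):
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8080):
    """Blocking entry point the container's start command would call."""
    HTTPServer(("0.0.0.0", port), ModelHandler).serve_forever()
```

The port passed to `serve()` should match the `containerPort` declared for the model container, and a production image would swap the toy `predict` for a real framework's inference call.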

Create a Kubernetes deployment:

Use a Kubernetes Deployment to run a set of replicas of the model container and to specify the desired state of the deployment. This includes the number of replicas, the container image to use, and any other relevant configuration, such as resource requests.

Create a Kubernetes service:

Use a Kubernetes Service to expose the Deployment on the network and to give it a stable IP address and DNS name.
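A minimal Service for such a model could look like the manifest generated below (again as JSON, which `kubectl apply -f` accepts). The name `model-api`, the `app: model-api` selector, and the port numbers are assumptions; the selector must match the labels on the Deployment's pods, and `targetPort` must match the port the model server listens on. `ClusterIP` keeps the Service internal to the cluster, while `LoadBalancer` asks the cloud provider for an external load balancer.

```python
import json
from typing import Dict

# ExternalName is left out: it maps to a DNS name and does not use a pod selector.
SUPPORTED_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service_manifest(name: str, selector: Dict[str, str], port: int = 80,
                     target_port: int = 8080, service_type: str = "ClusterIP") -> dict:
    """Service that forwards `port` to `target_port` on every pod matching `selector`."""
    if service_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": selector,
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(service_manifest("model-api", {"app": "model-api"}), indent=2))
```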

Scale the deployment:

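Scale the deployment up or down based on demand. This can be done manually (for example, with `kubectl scale deployment <name> --replicas=<n>`) or automatically with a HorizontalPodAutoscaler, based on metrics such as CPU utilization or, with a custom metrics setup, the number of requests.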
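The HorizontalPodAutoscaler's core rule, as documented, is `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`, with no change made when the ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below applies that rule to average CPU utilization, clamped to assumed `min_replicas`/`max_replicas` bounds. It leaves out the real controller's stabilization window and its handling of missing metrics and not-yet-ready pods.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Apply the HPA scaling rule to an average utilization metric (e.g. CPU %)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_utilization=90, target_utilization=60))
    print(desired_replicas(4, current_utilization=20, target_utilization=60))
```

For example, 4 replicas averaging 90% CPU against a 60% target gives ceil(4 × 1.5) = 6 replicas, while 4 replicas at 20% shrink to ceil(4 × 1/3) = 2.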

Monitor and troubleshoot:

Use tools such as `kubectl logs` and `kubectl describe`, together with a monitoring stack such as Prometheus and Grafana, to monitor the health and performance of the deployment and to troubleshoot any issues that arise.

Update the deployment:

Use the Deployment's rolling update strategy to roll out new versions of the model or other configuration changes without downtime, and roll back (for example, with `kubectl rollout undo`) if a new version misbehaves.

By using Kubernetes to deploy and scale machine learning models, you can take advantage of the platform’s built-in scaling and orchestration capabilities and help keep your models running efficiently and reliably.

Additionally, by using containerization to package the model and its dependencies, you can easily move the deployment between different environments, such as development, staging, and production.

A Guide to Managing Clusters Across Different Clouds

Kubernetes, an open-source container orchestration technology, lets developers deploy, scale, and manage containerized applications.

It has become a popular choice for managing applications in the cloud due to its ability to automate many of the manual tasks associated with deploying and scaling applications.

As more organizations adopt multi-cloud strategies, using multiple cloud providers for different workloads, the ability to manage Kubernetes clusters across different clouds becomes increasingly important.

Here are some best practices for managing Kubernetes clusters across different clouds:

Use a Multi-Cloud Management Tool:

Several multi-cluster management tools, such as Rancher, can help you manage Kubernetes clusters across different clouds. These tools provide a unified view of all your clusters and let you manage them from a single dashboard.

Use a Consistent Kubernetes Distribution:

It’s important to use a consistent distribution across all your clouds to ensure consistency in your deployment and management practices. This will also make it easier to move workloads between clouds if necessary.

Use a Single Sign-On Solution:

Using a single sign-on solution will make it easier for users to access all your Kubernetes clusters regardless of which cloud they are deployed in. This will also make it easier to manage access control and security across all your clusters.

Use Cloud-Native Services:

Take advantage of cloud-native services that are specific to each cloud provider. For example, AWS provides Elastic Load Balancing (ELB) and Azure provides Azure Load Balancer. These managed services can improve the performance and availability of your applications.

Use a Consistent Network Topology:

Ensure that the network topology across all your clouds is consistent. This will make it easier to manage and troubleshoot your clusters across different clouds.

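Use a Consistent Monitoring and Logging Solution:

Use the same monitoring and logging stack in every cloud so that metrics and logs from all your clusters can be collected, compared, and alerted on in one place.

Top Kubernetes Tools and Service Providers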

There are many Kubernetes tools and service providers available, but here are some of the most popular and widely used options:

Kubernetes Tools:

kubectl:

The command-line tool for managing Kubernetes clusters and resources.

Helm:

A package manager for Kubernetes that bundles application manifests into reusable packages called charts and manages their installation, upgrades, and rollbacks.

Prometheus:

An open-source monitoring and alerting system, widely used with Kubernetes, that collects metrics about your cluster’s health and performance.

Grafana:

A visualization tool that works with Prometheus to provide interactive dashboards and graphs.

Kubernetes Service Providers:

Amazon Elastic Kubernetes Service (EKS):

A fully managed Kubernetes service provided by Amazon Web Services (AWS).

Google Kubernetes Engine (GKE):

A fully managed Kubernetes service provided by Google Cloud Platform (GCP).

Microsoft Azure Kubernetes Service (AKS):

A fully managed Kubernetes service provided by Microsoft Azure.

DigitalOcean Kubernetes:

A managed Kubernetes service provided by DigitalOcean, with a focus on simplicity and ease of use.

Other notable Kubernetes service providers include IBM Cloud Kubernetes Service, Red Hat OpenShift, and VMware Tanzu.

These providers offer a range of features and services, including managed Kubernetes clusters, container registries, and container security tools.

Ultimately, the choice of Kubernetes tools and service providers will depend on your specific needs and requirements.

It’s important to evaluate each option carefully and consider factors such as cost, scalability, ease of use, and compatibility with your existing infrastructure and workflows.

Q&A on Kubernetes

Q: What is Kubernetes?

A: Kubernetes is an open-source container orchestration technology that automates the deployment, scaling, and maintenance of containerized applications.

Q: What are the benefits of using Kubernetes?

A: Some of the benefits of using Kubernetes include automatic scaling, service discovery, load balancing, and fault tolerance.

It also provides a flexible and robust platform for managing containerized workloads in production environments.

Q: How does Kubernetes work?

A: Kubernetes works by providing a set of APIs and tools for managing containerized applications.

It uses a declarative approach to configuration: developers define the desired state of their application, and Kubernetes controllers continuously work to make the actual state match it.
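The heart of that declarative model is a comparison between desired and observed state. As a simplified illustration (not how the API server or `kubectl diff` is actually implemented), the Python function below walks a desired spec and reports every field where the live object differs; a controller would act on each difference and then repeat the comparison. Fields the user did not specify, such as `status` or server-assigned metadata, are ignored, and lists are compared as whole values.

```python
from typing import Any, List


def diff(desired: Any, actual: Any, path: str = "") -> List[str]:
    """List fields where `actual` differs from `desired`, checking only fields set in `desired`."""
    if isinstance(desired, dict):
        actual_dict = actual if isinstance(actual, dict) else {}
        changes: List[str] = []
        for key, value in desired.items():
            child = f"{path}.{key}" if path else key
            changes.extend(diff(value, actual_dict.get(key), child))
        return changes
    # Leaf values (and lists) are compared as a whole.
    return [] if desired == actual else [f"{path}: {actual!r} -> {desired!r}"]


if __name__ == "__main__":
    desired = {"metadata": {"labels": {"app": "web"}}, "spec": {"replicas": 3}}
    live = {
        "metadata": {"labels": {"app": "web"}, "uid": "1234"},  # uid is set by the server
        "spec": {"replicas": 2},
        "status": {"readyReplicas": 2},
    }
    print(diff(desired, live))
```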

Q: What are some common use cases for Kubernetes?

A: Kubernetes is commonly used for deploying and managing microservices-based applications, as well as for building scalable and resilient web applications. It can also be used for managing batch processing workloads, machine learning models, and IoT applications.

Q: What are some key components of Kubernetes architecture?

A: Some key components of the Kubernetes architecture include the API server, etcd, the kubelet, and kube-proxy.

The API server exposes the Kubernetes API, etcd is a distributed key-value store that holds the cluster’s configuration and state, the kubelet runs on each node and manages its containers, and kube-proxy implements networking for Services on each node.

Q: How can I learn Kubernetes?

A: There are many resources available for learning Kubernetes, including online tutorials, documentation, and training courses. Some popular Kubernetes learning resources include the official Kubernetes documentation, the Kubernetes Fundamentals course on edX, and the Kubernetes Bootcamp course on Udemy.

Q: Is Kubernetes difficult to manage?

A: It can be complex and challenging to manage, especially for organizations with limited resources or expertise.

To use Kubernetes effectively, it is essential to have a solid understanding of containerization and networking concepts, as well as a comprehensive understanding of its architecture and best practices.

However, cloud providers also offer managed Kubernetes services, such as EKS, GKE, and AKS, which can help simplify management.

Conclusion

Kubernetes, a powerful and widely used container orchestration system, simplifies the deployment, scaling, and maintenance of containerized applications.

It provides a flexible and robust platform for managing containerized workloads in production environments, offering features such as automatic scaling, service discovery, load balancing, and fault tolerance.

It also provides a rich set of APIs and tools for managing containerized applications, making it easier for developers and operations teams to collaborate and streamline their workflows.

Its open-source nature and large community make it an attractive choice for organizations looking to adopt cloud-native technologies and build modern, scalable applications.

However, Kubernetes can also be complex and challenging to manage, especially for organizations with limited resources or expertise.

To use it effectively, it is essential to have a solid understanding of containerization and networking concepts, as well as a comprehensive understanding of its architecture and best practices.

Overall, Kubernetes offers significant benefits to organizations looking to deploy and manage containerized applications at scale, but it also requires careful planning, management, and expertise to realize its full potential.

Note: The information in this article is based solely on information found on the internet and does not come from any private sources.